TAKEAWAYS

Artificial Intelligence (AI) is no longer a future promise – it is already transforming the finance and audit profession. A recent global study of Chief Financial Officers (CFOs) and finance executives found that 71% of companies are using AI in finance, with 41% doing so at scale. The opportunities are vast: resilience, sharper insights and faster decisions. But the risks are just as real: regulatory complexity, ethical concerns, wasted investments and the potential erosion of trust in financial reporting.

The finance and audit profession is at a turning point. These roles must evolve from being custodians of historical accuracy to becoming leaders in AI adoption, risk management and governance. This article explores how finance and audit professionals can seize the opportunities of AI, manage the risks and embed accountability, transparency and trust into every stage of the AI journey.

Traditionally, finance and audit professionals have been stewards of financial insights, compliance and internal control. Much of their work relied on manual processes, structured data and retrospective analysis. AI changes this equation. Agentic AI, the latest evolution of AI systems, is reshaping the profession in fundamental ways.

Beyond automation, AI is also changing the nature of professional judgement. While human oversight remains essential, finance and audit professionals are increasingly expected to interpret AI-generated insights, assess model reliability and ensure that automated decisions align with ethical and regulatory standards.

In short, the profession is pivoting from a focus on historical data to generating forward-looking intelligence, and is taking on responsibility for risk, data governance and AI accountability.

Agentic AI represents a new class of systems designed to autonomously or semi-autonomously fulfil objectives. These agents can execute tasks based on predefined triggers such as periodic scheduling, data updates or human prompts, and can interact with other agents and make decisions with minimal human input.

Visualise a dynamic finance department in a large corporation, where AI agents could transform processes in the following ways:

With routine tasks automated, finance professionals can prioritise strategy and innovation. While Agentic AI presents many opportunities, it does introduce new risks:

For highly regulated sectors such as financial services, these challenges strike at the heart of what matters most: accountability, transparency and trust.

Despite these challenges, businesses cannot afford to slow down AI adoption. Finance professionals should familiarise themselves with the international frameworks that have become industry points of reference:

Locally, Singapore’s AI strategy focuses on public good, trust and safety, and favours flexible governance frameworks over strict legislation. Specialised resources such as the Model AI Governance Framework for Generative AI and the Responsible AI Framework in Accountancy by ISCA and NTU, initiatives such as AI Verify, and testing tools such as Project Moonshot help organisations manage risks and innovate responsibly. As regulation and societal expectations continue to evolve, finance professionals can use these benchmarks to assess AI systems and ensure their organisations keep pace with emerging best practices.

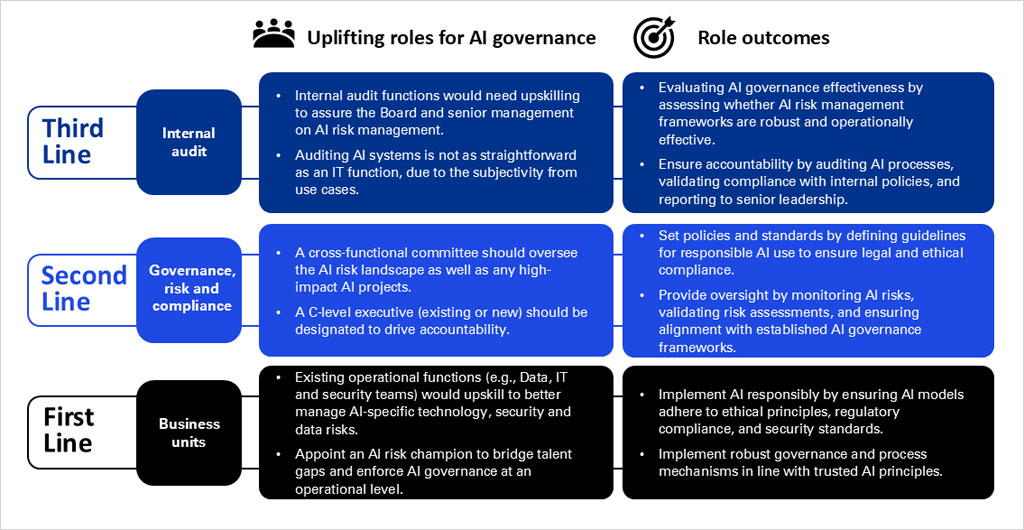

How can organisations translate frameworks into action? The Three Lines Model provides a strong foundation and a practical structure (Figure 1). Together, the three lines form a coordinated, layered defence that helps embed AI governance throughout the organisation, with finance and audit professionals playing critical roles at every level.

Figure 1: Adapting the Three Lines Model to support AI governance

In this ecosystem, finance and audit professionals serve as the operational and assurance arms of AI governance. Their collaboration with boards and CFOs ensures that AI adoption is not only innovative but also responsible, transparent and aligned with the organisation’s values.

Boards play a critical role in setting the strategic direction for AI adoption. They must ensure strong governance, effective risk management and alignment with long-term business goals. Through their oversight, boards set the tone at the top and reinforce the importance of ethical and responsible AI use across the organisation.

CFOs are also key enablers of AI transformation. In a recent survey of 100 CFOs, 70% identified AI and GenAI as the most crucial technologies for supporting the finance function’s strategic decision-making. With their deep understanding of financial reporting and risk, CFOs can champion AI initiatives that enhance performance while safeguarding integrity.

Accountants must now build AI literacy to understand how AI tools impact financial processes, validate AI-generated outputs and ensure data quality. By collaborating with data scientists and IT teams to align AI tools with financial reporting standards and internal controls, they help ensure that financial information remains reliable and decision-useful, even in an AI-driven environment.

Auditors, meanwhile, must adapt their methodologies to assess AI systems and controls. This includes evaluating algorithmic risks, testing the integrity of AI-generated data and providing assurance that AI use aligns with ethical and regulatory expectations. As AI becomes more embedded in business operations, internal auditors are uniquely positioned to offer independent assurance over the design, implementation and monitoring of AI systems.

AI is not just changing the tools of finance; it is redefining the profession itself. Finance and audit professionals can no longer be passive recordkeepers or compliance checkers. They must become AI risk managers, ethical gatekeepers and strategic enablers of responsible innovation.

The path forward is clear: accountability must be embedded at every level of the organisation and reinforced by proactive governance, so that trust is earned deliberately rather than by chance. International and local frameworks already provide the guideposts, but it is finance and audit professionals who must put them into practice. By prioritising AI literacy, strengthening internal controls and championing responsible practices, they can play a pivotal role in fostering an environment where AI upholds and advances financial integrity.

Edmund Heng, CISA, ICP, is Partner, Technology Risk, KPMG in Singapore; Wei Li Tea, CA (Singapore), is Partner, Risk, KPMG in Singapore; and Gary Teo, CA (Singapore), is Head of Internal Audit, Airwallex.